Examples

Basic Examples (1)

Obtain the benchmark data:
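As a minimal sketch, the retrieval step can be written with `ResourceData` and the resource name used throughout this page. The variable name `data` is only illustrative, and the `ColumnKeys` call assumes the result is a `Tabular` object (Wolfram Language 14.2 or later), which the other examples on this page suggest but do not state outright.

```wolfram
(* Fetch the dataset from the Wolfram Data Repository; requires network access *)
data = ResourceData["LLMBenchmarks Data"];

(* List the column names; assumes the result is a Tabular object *)
ColumnKeys[data]
```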

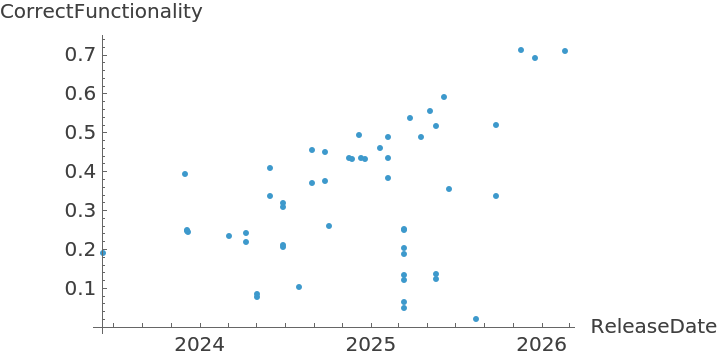

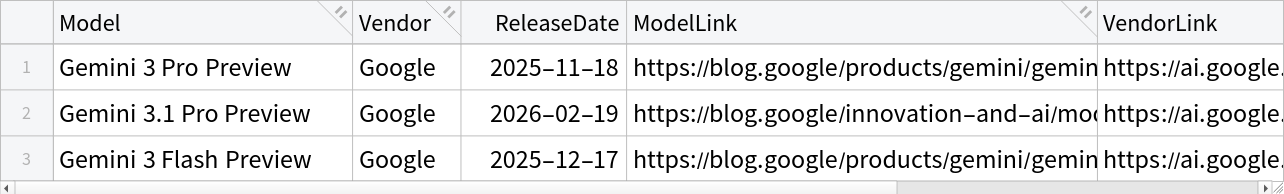

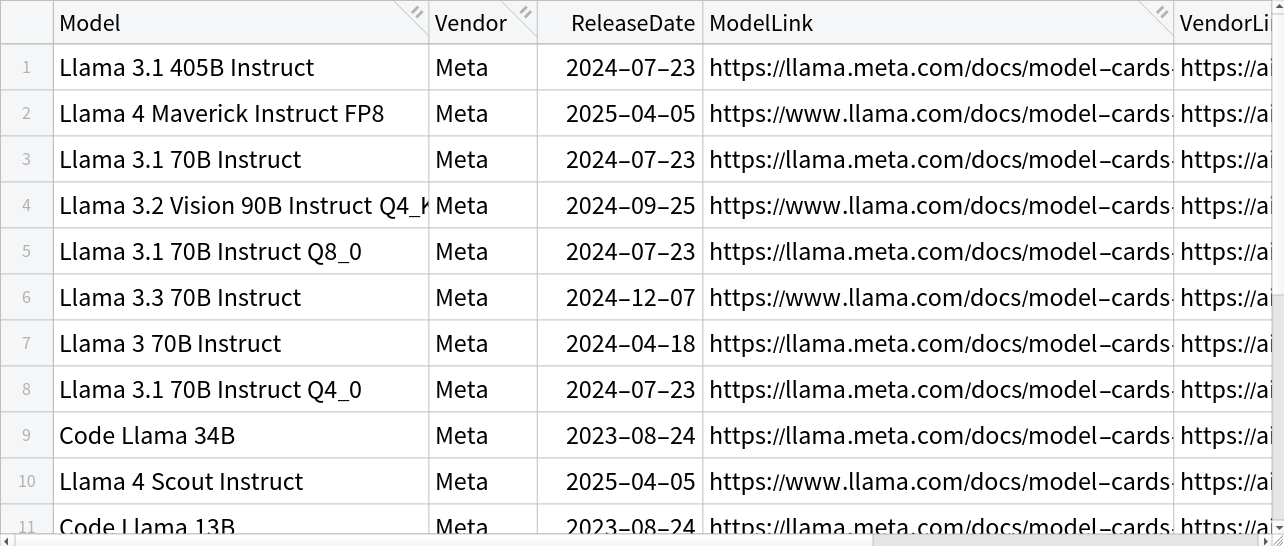

Visualizations (3)

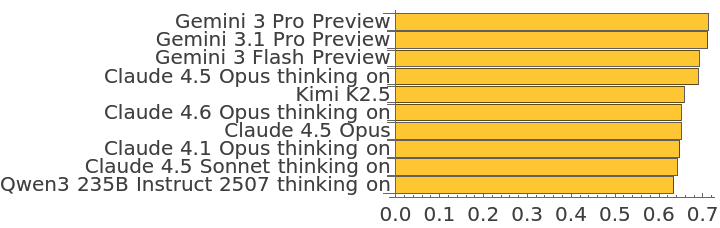

Display a bar chart with the top 10 models:
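The input for this chart can be sketched as follows, using the `"Model"` and `"CorrectFunctionality"` columns that appear in this resource's examples. The intermediate name `top10` is introduced here only for readability.

```wolfram
(* Keep the 10 rows with the highest "CorrectFunctionality", sorted ascending for the chart *)
top10 = SortBy["CorrectFunctionality"]@
   MaximalBy[ResourceData["LLMBenchmarks Data"], "CorrectFunctionality", 10];

(* Build a "value" column that pairs each score with its model name as a label,
   then plot that column as horizontal bars *)
BarChart[
 ConstructColumns[
    "value" -> Function[Labeled[#CorrectFunctionality, #Model]]]@top10 -> "value",
 BarOrigin -> Left]
```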

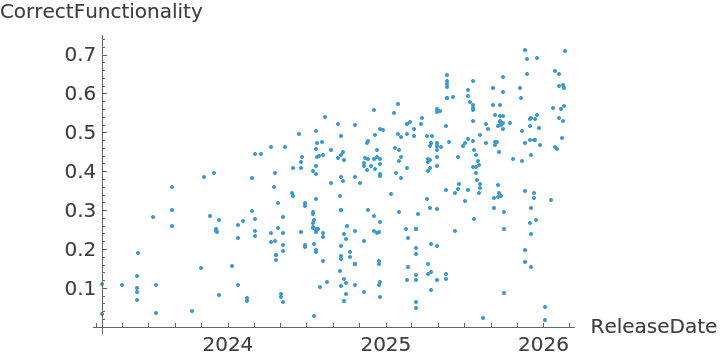

Display all the correct functionality results over time:
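A sketch of the corresponding input, plotting the `"CorrectFunctionality"` column against `"ReleaseDate"` for every row. It assumes the `tabular -> {xcol, ycol}` plotting syntax available for `Tabular` data in recent Wolfram Language versions.

```wolfram
(* Scatter plot of score against release date for all models *)
ListPlot[
 ResourceData["LLMBenchmarks Data"] -> {"ReleaseDate", "CorrectFunctionality"},
 AxesLabel -> {"ReleaseDate", "CorrectFunctionality"}]
```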

Display all the correct functionality results for Google models over time:
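The same plot restricted to one vendor can be sketched by filtering on the `"Vendor"` column first. The vendor string `"Google"` is taken from this resource's own example.

```wolfram
(* Keep only the rows whose vendor is Google, then plot score against release date *)
ListPlot[
 Select[#Vendor == "Google" &]@
   ResourceData["LLMBenchmarks Data"] -> {"ReleaseDate", "CorrectFunctionality"},
 AxesLabel -> {"ReleaseDate", "CorrectFunctionality"}]
```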

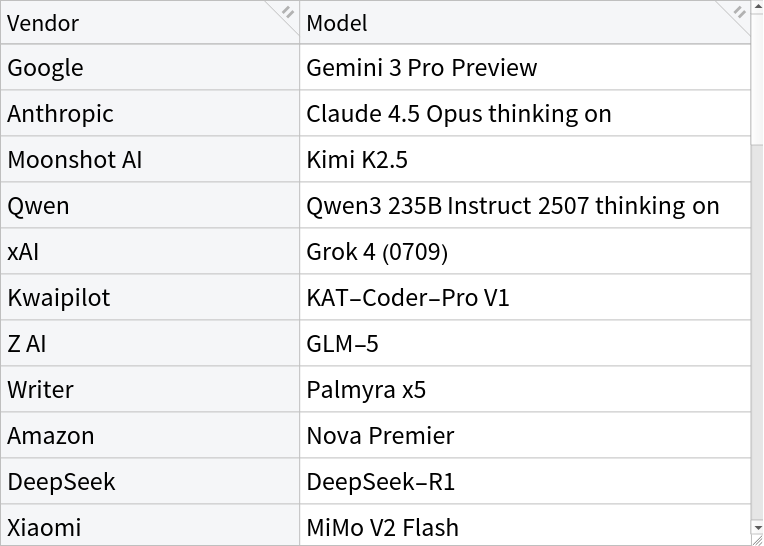

Analysis (4)

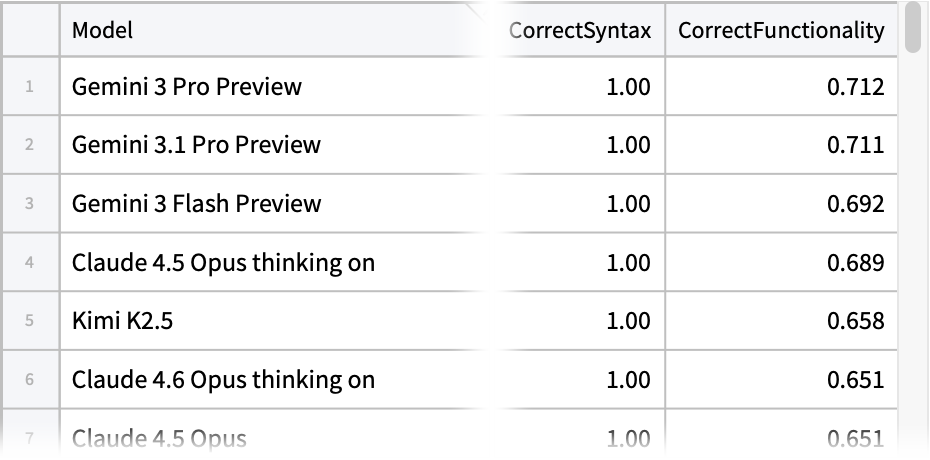

Get the top three models by code generation correctness:
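One way this could be written, following the `MaximalBy` pattern used for the top 10 chart. It assumes that "code generation correctness" refers to the `"CorrectFunctionality"` column, which this page does not confirm explicitly.

```wolfram
(* Assumption: "CorrectFunctionality" is the code generation correctness score *)
MaximalBy[ResourceData["LLMBenchmarks Data"], "CorrectFunctionality", 3]
```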

Select all models from Meta:
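A sketch of the selection, modeled on the vendor filter used elsewhere in this resource. The exact vendor string `"Meta"` is an assumption; the value stored in the `"Vendor"` column may be spelled differently.

```wolfram
(* Assumption: Meta's models are recorded with the vendor string "Meta" *)
Select[#Vendor == "Meta" &]@ResourceData["LLMBenchmarks Data"]
```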

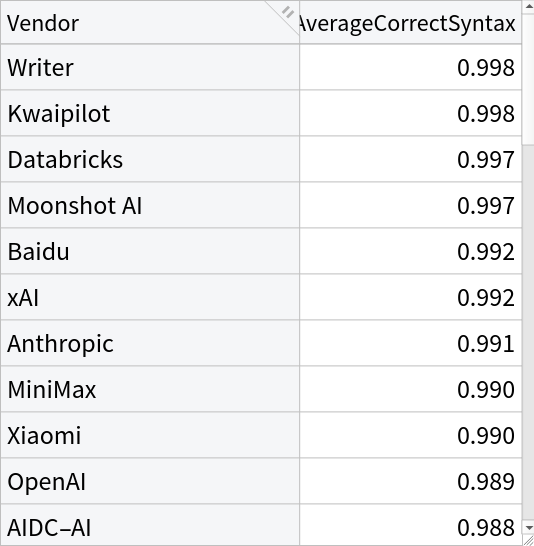

Select the top model for each vendor:
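The input for this step can be sketched with `AggregateRows`, grouping by `"Vendor"` and keeping the first `"Model"` in each group. Note that taking the first entry yields the top model only if the rows are already ordered from best to worst, which appears to be the assumption here.

```wolfram
(* Group rows by vendor and keep the first model listed in each group *)
AggregateRows[
 ResourceData["LLMBenchmarks Data"],
 "Model" -> Function[First@#Model],
 "Vendor"]
```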

Sort the vendors by their average model score on generating valid Wolfram Language syntax:
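A sketch of the corresponding input: average the `"CorrectSyntax"` column within each vendor, then sort the vendors from highest to lowest average. The output column name `"AverageCorrectSyntax"` is the one used in this resource's example.

```wolfram
(* Mean syntax score per vendor, sorted in descending order *)
AggregateRows[
  ResourceData["LLMBenchmarks Data"],
  "AverageCorrectSyntax" -> Function[Mean[#CorrectSyntax]],
  "Vendor"] // ReverseSortBy["AverageCorrectSyntax"]
```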

Bibliographic Citation

Wolfram Research, "LLMBenchmarks Data" from the Wolfram Data Repository (2026)

![BarChart[ConstructColumns["value" -> Function[Labeled[#CorrectFunctionality, #Model]]]@SortBy["CorrectFunctionality"]@MaximalBy[ResourceData["LLMBenchmarks Data"], "CorrectFunctionality", 10] -> "value", BarOrigin -> Left]](https://www.wolframcloud.com/obj/resourcesystem/images/9fb/9fb6826d-bb0d-47f1-844d-d6c12b78ae88/5850c4825fb4dfb1.png)

![ListPlot[ResourceData["LLMBenchmarks Data"] -> {"ReleaseDate", "CorrectFunctionality"}, AxesLabel -> {"ReleaseDate", "CorrectFunctionality"}]](https://www.wolframcloud.com/obj/resourcesystem/images/9fb/9fb6826d-bb0d-47f1-844d-d6c12b78ae88/669bb24fbccd74e1.png)

![ListPlot[Select[#Vendor == "Google" &]@ResourceData["LLMBenchmarks Data"] -> {"ReleaseDate", "CorrectFunctionality"}, AxesLabel -> {"ReleaseDate", "CorrectFunctionality"}]](https://www.wolframcloud.com/obj/resourcesystem/images/9fb/9fb6826d-bb0d-47f1-844d-d6c12b78ae88/5bf3566cbb126cb0.png)

![AggregateRows[ResourceData["LLMBenchmarks Data"], "Model" -> Function[First@#Model], "Vendor"]](https://www.wolframcloud.com/obj/resourcesystem/images/9fb/9fb6826d-bb0d-47f1-844d-d6c12b78ae88/26d194917254bac7.png)

![AggregateRows[ResourceData["LLMBenchmarks Data"], "AverageCorrectSyntax" -> Function[Mean[#CorrectSyntax]], "Vendor"] // ReverseSortBy["AverageCorrectSyntax"]](https://www.wolframcloud.com/obj/resourcesystem/images/9fb/9fb6826d-bb0d-47f1-844d-d6c12b78ae88/3c8545be136b85c1.png)