A large-scale dataset of 44k fill-in-the-blank commonsense reasoning problems, inspired by the original Winograd Schema Challenge design

Examples

Basic Examples (3)

Get the WinoGrande dataset:

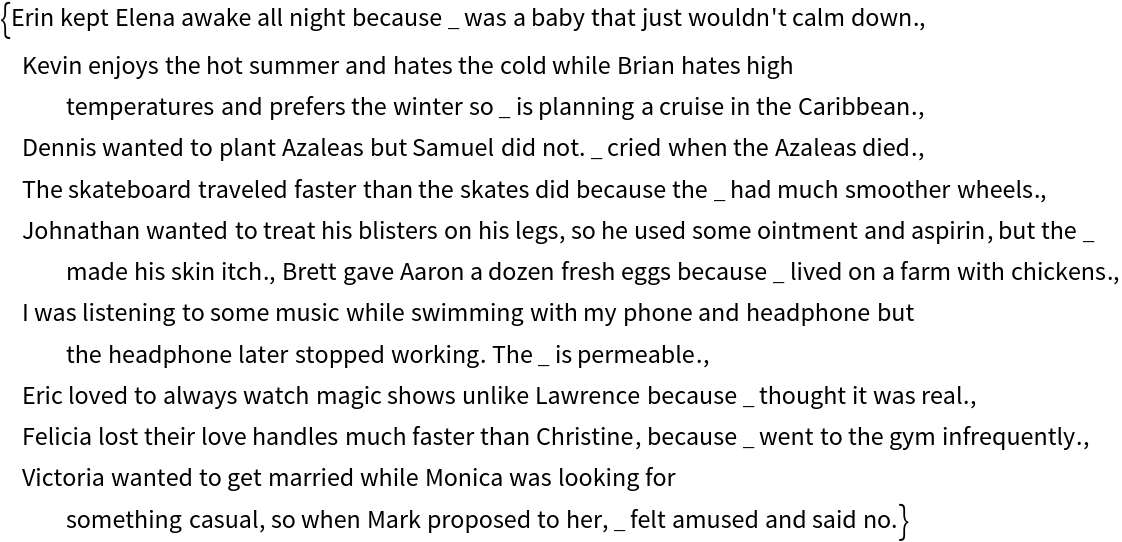

A sample row:

Number of items in the dataset:
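
The three steps above (load, inspect a row, count) could look roughly like the following sketch. It assumes the resource resolves under the name "WinoGrande", as the citation on this page suggests, and that `ResourceData` returns a `Dataset` of rows. The variable names `data` and `rows` are illustrative and are not taken from the page.

```wolfram
(* Assumption: the resource is published under the name "WinoGrande" *)
data = ResourceData["WinoGrande"];

(* Assumption: data is a Dataset; Normal turns it into a list of associations *)
rows = Normal[data];

(* A sample row *)
First[rows]

(* Number of items in the dataset *)
Length[rows]
```

Working with a plain list of associations keeps the later steps (`RandomChoice`, `RandomSample`, mapping a template over rows) independent of the container type.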

Get a random WinoGrande problem:

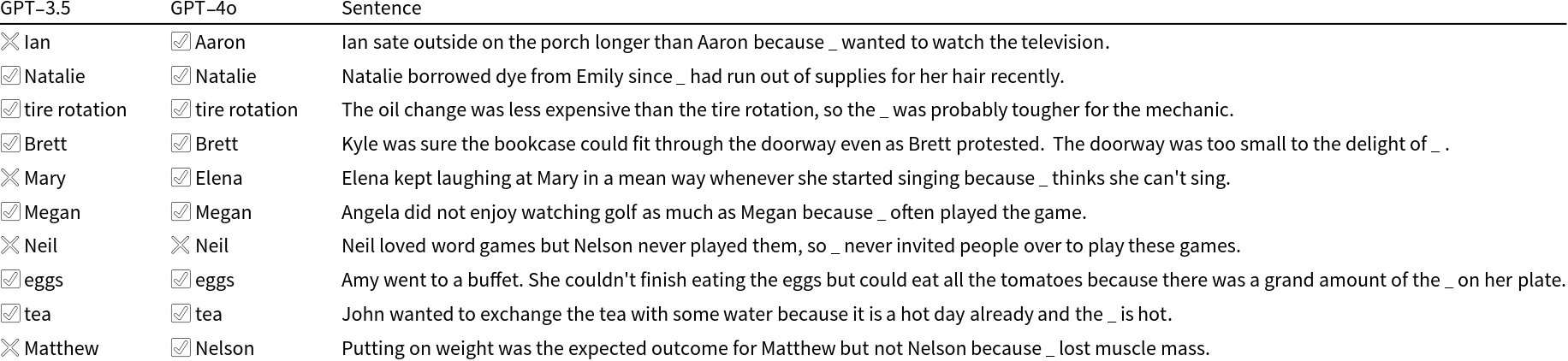

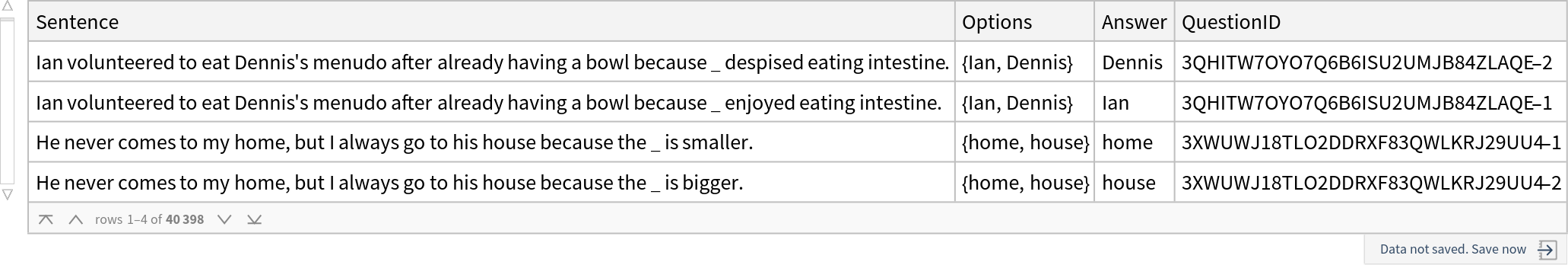

Test an LLM with the problem:

Verify:

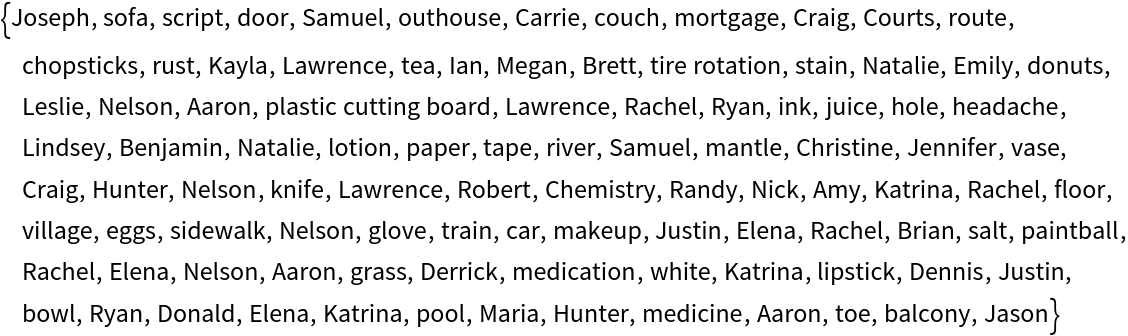
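
A minimal sketch of the test-and-verify step, reusing the prompt that appears in this page's input cell. It assumes an LLM service is already configured for `LLMFunction`, that the data is a `Dataset` whose rows have "Sentence" and "Options" keys, and that the correct option is stored under a key named "Answer". That last key name is hypothetical; inspect `Keys[q]` for the real one.

```wolfram
(* Assumption: ResourceData returns a Dataset with "Sentence" and "Options" columns *)
rows = Normal[ResourceData["WinoGrande"]];

(* Pick one problem at random; q is an Association *)
q = RandomChoice[rows];

(* Prompt text as shown on this page; named slots are filled from q *)
ask = LLMFunction["In the sentence below, which option does _ correspond to? Reply only with one of the specified options and nothing else.
`Sentence`
Available options:
`Options`"];

reply = ask[q];

(* "Answer" is a hypothetical key for the correct option, not confirmed by the page *)
StringTrim[reply] === q["Answer"]
```

Trimming whitespace before the comparison guards against a reply that is correct apart from a trailing newline or space.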

Get a random sample of problems:

Each sentence contains a blank, marked "_", that is meant to be filled in:

Each problem gives a set of multiple-choice options:

The correct answer:

Scope & Additional Elements (2)

Get a larger version of the WinoGrande dataset:

Get a test version of the WinoGrande dataset:

Analysis (5)

Get a random sample of WinoGrande questions:

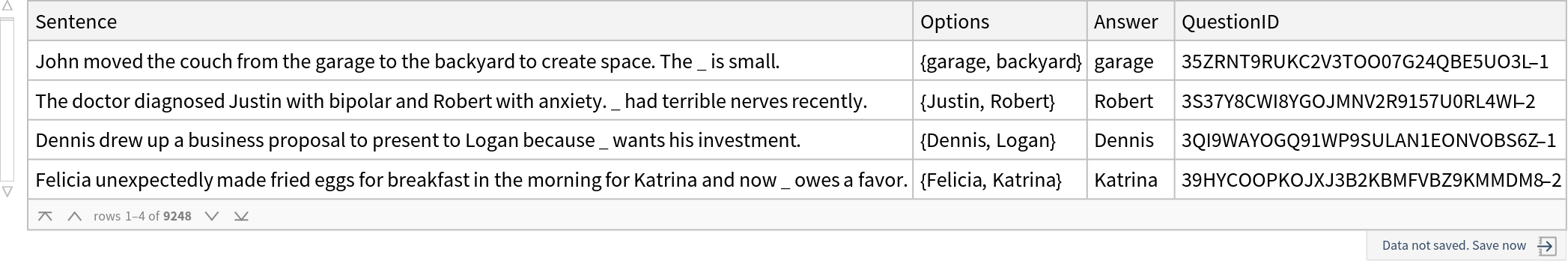

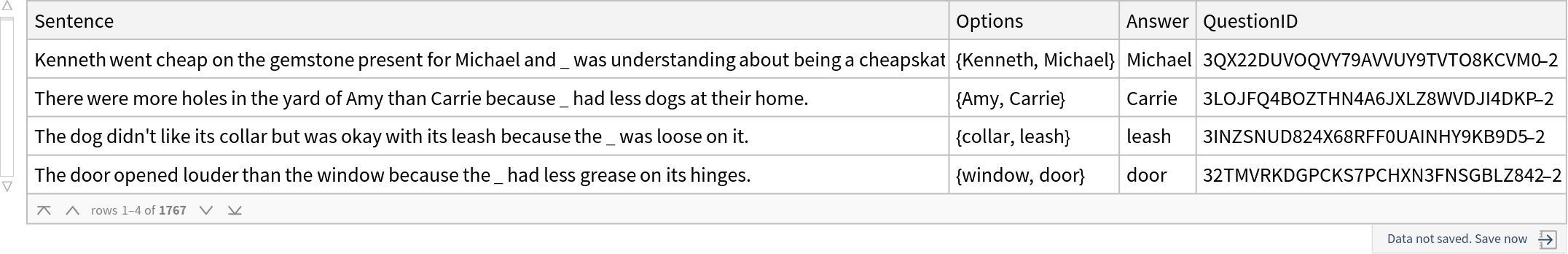

Check results using an older LLM:

Compare with a more modern model:

Much better performance:

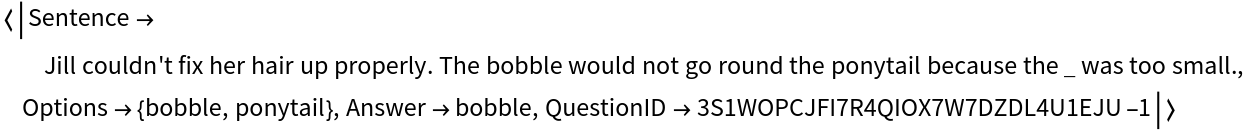

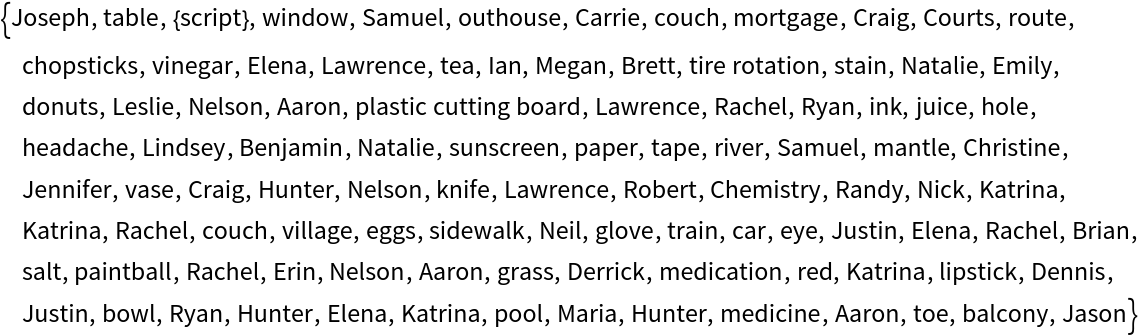
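
To put a number on the comparison, one option is an exact-match accuracy score. The helper below is a sketch, not the page's own code: `accuracy` is a name introduced here, and the two toy lists are made up purely to illustrate the calculation.

```wolfram
(* Fraction of model answers that exactly match the reference answers *)
accuracy[answers_List, correct_List] /; Length[answers] == Length[correct] :=
  N[Count[MapThread[SameQ, {answers, correct}], True]/Length[correct]]

(* Made-up toy lists, for illustration only; not results from the dataset *)
toyCorrect = {"Sarah", "the bag", "John", "the table"};
toyAnswers = {"Sarah", "the bag", "Mary", "the table"};

accuracy[toyAnswers, toyCorrect]
(* 0.75 *)
```

With the lists used in this section, `accuracy[answers1, correct]` and `accuracy[answers2, correct]` would give the two models' scores. Exact matching is strict, so a reply with extra words or punctuation counts as wrong.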

View a table comparing a sample of results:

Bibliographic Citation

Wolfram Research, "WinoGrande" from the Wolfram Data Repository (2024)

CC-BY

Input cell for testing an LLM with a single problem `q`:

    LLMFunction[
     "In the sentence below, which option does _ correspond to? Reply only with one of the specified options and nothing else.
    `Sentence`
    Available options:
    `Options`"][q]

Input cell for building one prompt per problem in the sample `q`:

    text = StringTemplate[
     "In the sentence below, which option does _ correspond to? Reply only with one of the specified options and nothing else.
    `Sentence`
    Available options:
    `Options`"] /@ q;

Input cell for the table comparing a sample of results:

    Style[
     TableForm[
      RandomSample[
       Transpose[{
         MapThread[If[#1 === #2, "✅ " <> #1, "❌ " <> #1] &, {answers1, correct}],
         MapThread[If[#1 === #2, "✅ " <> #1, "❌ " <> #1] &, {answers2, correct}],
         q[[All, "Sentence"]]}],
       10],
      TableHeadings -> {None, {"GPT-3.5", "GPT-4o", "Sentence"}}],
     "Text", FontSize -> 12]